The Nondeterministic Hot Dog Problem

Plus cloud native databases, data lineage, and burrito command line tools

TL;DR

From an operator's perspective, change in data science systems is still entirely too hard to manage.

What I'm Writing

The long thread on cloud native data systems continues!

In the Buffer

Even more cloud native database stuff

Purdue is launching a course on Cloud Native Databases.

The Search for a Cloud Native Database: I'm not sure I fully accept some of the premises in this article, but it's certainly a concise analysis of the problem. Automating deployment of a complex database like Cassandra isn't enough; it also needs to be managed in a cloud native way. I read through several of Jeff Carpenter's recent pieces on this topic, and another good one is his writeup on maturity models for cloud native DBs.

A fascinating conversation with Julien Le Dem about data lineage, OpenLineage, his career experiences, and more.

The Modest Webapp

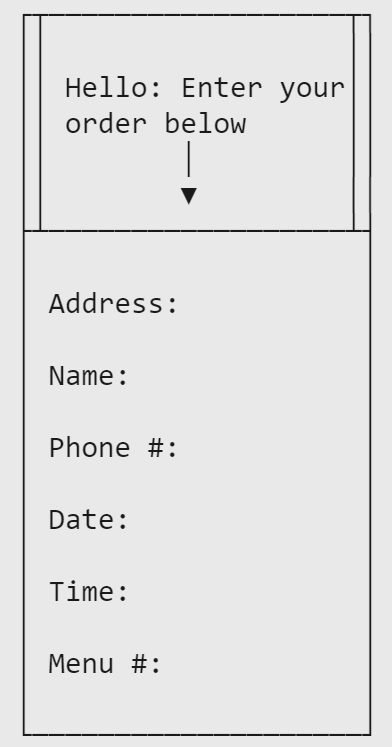

Let's imagine a simple application. It's straightforward, it's reliable, and it can serve lots of traffic. Our fictional company, Scones Unlimited, uses this webapp as an internal tool for ordering lunches from the nearby designer hot dog stand. Employees access the app from the browser. It looks like this:

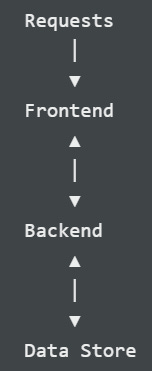

The frontend user interface that renders this form in the browser is written in Ficus because a developer felt like it that day. It gets shoved into a Caddy server instance as an optimized, compressed, static bundle of text files. Caddy runs on a dedicated machine that is network-isolated from everything except incoming traffic and the backend server. Speaking of the backend server, Caddy is configured to forward any request with /api/ in its path to the backend. That traffic is basically all just form data from the form pictured above.

The backend server is just FastAPI. It applies the hot dog business logic. Whenever application state needs to persist (e.g., to keep track of order history), FastAPI calls the data store. The backend server runs on its own dedicated instance, isolated in yet another network segment that can only talk to Caddy or the data store.

The data store is MinIO, running on, you guessed it, its own dedicated machine in a network segment that can only talk to the FastAPI machine.
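To make the isolation concrete, here is a rough Python sketch that writes the network rules above down as an allow-list, along with the /api/ routing rule. It is not Caddy or firewall configuration; the tier labels, `ALLOWED_PEERS`, `can_talk`, and `route` are hypothetical names used only for illustration.

```python
# Hypothetical allow-list for the three tiers described above. "internet"
# stands in for browser traffic; the tier names are illustrative labels only.
ALLOWED_PEERS: dict[str, frozenset[str]] = {
    "internet": frozenset({"caddy"}),
    "caddy": frozenset({"internet", "fastapi"}),
    "fastapi": frozenset({"caddy", "minio"}),
    "minio": frozenset({"fastapi"}),
}


def can_talk(src: str, dst: str) -> bool:
    """True if the allow-list permits traffic from src to dst."""
    return dst in ALLOWED_PEERS.get(src, frozenset())


def route(path: str) -> str:
    """Approximate the proxy rule: anything with /api/ in the path is
    forwarded to the backend; everything else is a static file from Caddy."""
    return "fastapi" if "/api/" in path else "caddy"
```

In a real deployment these rules would live in firewall or security-group configuration rather than application code; the point of writing them out is that the data store is never directly reachable from the browser, since every request has to pass through Caddy and FastAPI first.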

Besides some, ahem, esoteric choices for the components, this is an entirely boring project fulfilling a mundane need with boring 3-tier infrastructure. It does its job day after day. It is noble. The app is basically the only thing running on these machines, other than a handful of agents the Scones Unlimited Platform Engineering group uses for stuff like deploys, monitoring, logging, security, secrets management, and so on.

Hot Dog Problem Space

Eventually, an enterprising data scientist decides to add a feature to the hot dog ordering app. The feature observes users and continuously updates its model so that they are served hot dog recommendations. The change goes through the app's normal workflow:

The data scientist pushed her code changes to GitHub and opened a pull request to merge them.

Another developer reviewed the changes and approved them.

The data scientist saw that the changes were approved, and hit the big green "Merge" button.

Their code merged into the project.

GitHub and one of the agents running on the dedicated machines worked together to package and deploy a new version of the app.

This workflow has been great for the team. Changes merge quickly, and the code quality stays pretty good because the reviewers are on the team too and care about the project. The data scientist is really happy that her code is in production now. Exit stage right.

Unbeknownst to everyone so far, a prankster was lurking in their ranks! One of the lead developers on the cybersecurity team at Scones Unlimited was sick and tired of finding their favorite hot dog, the Blue Cheese and Potato Chip Complete (#8), completely sold out. These artisanal cheese-and-starch covered protein tubes were too popular! When the recommender system shipped, they saw an opening, and hatched a dastardly plan to game the system.

First, they tested various ways of sending fake signals to the recommender. It turned out that you could place an order and then instantly cancel it, over and over again. The only limitation was that you couldn't place orders while the hot dog stand was closed.

Next, they confirmed that the fake signals affected their own recommendations.

With the help of some friends and a small(er)-scale blast of cancellations, they confirmed that the signals affected other users' recommendations too.

Finally, they wrote a little script that flooded the recommender system with random orders. The only exception to the randomness? You guessed it: BCPCC #8 was excluded.

This devious script caused the recommender system to think that the proportion of users ordering #8 was approaching zero, and so it stopped recommending it. Lo and behold, our saboteur depressed just enough demand for the doggies that they could reliably order them again. Mischief managed!
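The story doesn't say how the recommender actually works, so here is a toy model under one loudly stated assumption: a popularity recommender that counts every placed order as a signal, even an order that is cancelled a moment later. The menu size, order counts, and the `PopularityRecommender` class are all hypothetical, and this counts order events rather than distinct users as a simplification. It shows how flooding the signal with everything except #8 drives #8's share toward zero.

```python
import random
from collections import Counter

MENU = list(range(1, 11))  # hypothetical menu: hot dogs #1 through #10
TARGET = 8  # the Blue Cheese and Potato Chip Complete


class PopularityRecommender:
    """Toy recommender (an assumption, not the app's real model): it
    recommends the items with the most observed order events and counts
    every placed order, even if it is cancelled a moment later."""

    def __init__(self, top_k: int = 3) -> None:
        self.top_k = top_k
        self.counts: Counter = Counter()

    def observe_order(self, item: int) -> None:
        self.counts[item] += 1

    def share(self, item: int) -> float:
        total = sum(self.counts.values())
        return self.counts[item] / total if total else 0.0

    def recommend(self) -> list[int]:
        return [item for item, _ in self.counts.most_common(self.top_k)]


rec = PopularityRecommender()

# Organic traffic: #8 is a favorite (30 orders); every other item gets 10.
for item in MENU:
    for _ in range(30 if item == TARGET else 10):
        rec.observe_order(item)
before = rec.recommend()
share_before = rec.share(TARGET)

# The prank: place-and-cancel 10,000 random orders, never choosing #8.
rng = random.Random(0)  # fixed seed so the simulation is repeatable
decoys = [item for item in MENU if item != TARGET]
for _ in range(10_000):
    rec.observe_order(rng.choice(decoys))
after = rec.recommend()
share_after = rec.share(TARGET)

print(f"before: top={before} share of #8={share_before:.3f}")
print(f"after:  top={after} share of #8={share_after:.4f}")
```

In this toy run #8's share drops from 0.25 to roughly 0.003 and it falls out of the top recommendations. Notice that the recommender's code never changed; only its inputs did, and no pull request was involved.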

So what?

Before the machine learning model was added to the system, there were only a few readily identifiable participants who could change our application. When they made changes, they did it through git and their changes were reviewed. The various requests were timestamped, authorship was recorded, and the deployment of the changes was logged.

Once the machine learning system was added to the application, the universe of participants who could make changes expanded quite a bit. At Scones Unlimited this manifested in a fairly benign way, but consider:

Bias creeping in from a homogeneous pool of data labelers working in a data annotation service

Threat actors using advanced cyberattacks to poison a machine learning system or extract its training data

Business system operators changing a data input procedure without being aware of the downstream use of their data in a machine learning system

Each of these is a way that machine learning breaks our traditional views of change management and software determinism. Software can be (mostly) deterministic. It can be tested, or even formally verified, with proofs that its behavior can only be one of an enumerated set of outcomes. Machine learning systems are probabilistic, so they can have a continuous (if constrained) action space, and their behavior depends on data that never passes through code review. **From this fact alone, the system's cybersecurity and change management need special consideration.**

A variety of tools are working to address this problem, but none of them make it go away: MLflow, DVC and CML, Activeloop Hub, DataHub, and Pachyderm are all examples. The important thing to remember is that, yes, these tools can improve your workflows on their own, but connecting them to your security, change management, and automation (that is, to your operations) is sometimes the most valuable part.
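The core idea that lineage tooling builds on can be sketched in a few lines. Below is a minimal Python sketch, not modeled on any of those tools' APIs, of a hash-chained provenance log: for each model version it records a fingerprint of the training data, where the data came from, and when, much like git records authorship and timestamps for code. `ProvenanceLog`, `ProvenanceRecord`, `fingerprint`, and the `orders-api` source label are hypothetical names.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous digest" for the first record


def fingerprint(rows: list[dict]) -> str:
    """SHA-256 of a canonical JSON encoding of the training rows."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    model_version: str
    data_sha256: str
    source: str  # who or what supplied the data (hypothetical label)
    recorded_at: str  # ISO 8601 UTC timestamp
    prev_sha256: str  # digest of the previous record, chaining the log

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Append-only, hash-chained record of what each model was trained on."""

    def __init__(self) -> None:
        self.records: list[ProvenanceRecord] = []

    def append(self, model_version: str, rows: list[dict], source: str) -> ProvenanceRecord:
        prev = self.records[-1].digest() if self.records else GENESIS
        record = ProvenanceRecord(
            model_version=model_version,
            data_sha256=fingerprint(rows),
            source=source,
            recorded_at=datetime.now(timezone.utc).isoformat(),
            prev_sha256=prev,
        )
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """False if an earlier record was altered after later ones were added."""
        prev = GENESIS
        for record in self.records:
            if record.prev_sha256 != prev:
                return False
            prev = record.digest()
        return True


log = ProvenanceLog()
log.append("recs-v1", [{"user": "a", "item": 8, "cancelled": False}], source="orders-api")
log.append("recs-v2", [{"user": "b", "item": 3, "cancelled": True}], source="orders-api")
print(log.verify())  # True
# Quietly rewriting history breaks the chain:
log.records[0] = replace(log.records[0], source="someone-else")
print(log.verify())  # False
```

A log like this doesn't stop poisoned data from arriving, but it gives the data side of the system the same "who, what, and when" trail that the git workflow already gives the code.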

Thanks for reading! I'm Charles Landau, and if you liked this story of Scones Unlimited, you can: